Documenting Current and Projected Capabilities and Societal Ramifications of Autonomous Intelligent Systems

2023-2025 - Dr. Bradly Whitaker

The work in this project explores how NASA is implementing ethical and societally aware artificial intelligence (AI) solutions. As noted by IBM, “a tech-centric focus that solely revolves around improving the capabilities of an intelligent system doesn’t sufficiently consider human needs” (https://www.ibm.com/design/ai/ethics/everyday-ethics/). However, little work has been done in compiling information regarding societal ramifications or ethical concerns of using autonomous decision-making systems. Likewise, the capabilities and limitations of state-of-the-art autonomous systems are not well documented. In this project, our multidisciplinary team is performing essential background research in ethical AI systems. Our research goal for this project is to prepare review paper manuscripts in two areas of focus: (1) current and projected autonomous performance capabilities and limitations, and (2) societal ramifications of ethical decision-making models.

The first focus area of this research deals with the technical performance capabilities and limitations of AI systems. We investigate both ground-based systems and space-based systems, as these types of systems vary greatly in their use cases and computational capabilities. We consider autonomous systems’ abilities with respect to situational awareness, context assessment, and design characterizations. We research ethical and computational ramifications of providing autonomous systems with situational or context-based data. We plan to document findings of current roles and responsibilities of autonomous systems, and how those roles might evolve. For example, as technology improves to allow for more frequent and accurate decisions, it may be more ethical to allow AI systems to operate completely autonomously rather than simply providing suggestions to human decision makers.

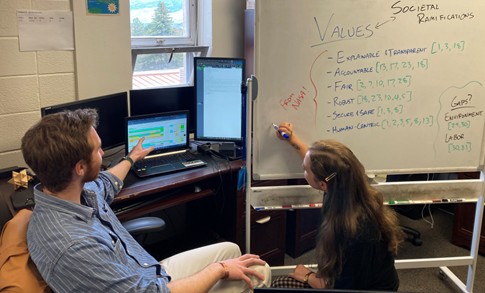

The second focus area of this research deals with societal ramifications of ethical decision-making models. We consider current methods of including multicultural perspectives in algorithm development, as well as current practices (if any) for prioritizing, ranking, or trading off lives and property. We also consider the evolution of ethical problems that may face fully autonomous AI decision-making systems. As part of our research, we plan to identify possible next steps for incorporating ethical considerations in the development of artificial intelligence technology at NASA.

In summary, our team draws on expertise in the two relevant fields of machine learning and ethics/philosophy in technology to perform important exploratory research in ethical AI. This research will culminate in the preparation of two literature review journal papers: one outlining current and projected capabilities for autonomous computing, and one outlining current and suggested practices in considering societal ramifications in autonomous computing development. Our combined expertise gives us the ability to effectively study the capabilities, limitations, and social ramifications of current and near-future AI technology. Our research is providing key information to NASA personnel as they work to solidify their framework for the ethical use of AI and develop formal ethics standards for highly automated and autonomous systems.